Upgrade Palette Management Appliance

This is a Tech Preview feature and is subject to change. Upgrades from a Tech Preview deployment may not be available. Do not use this feature in production workloads.

Follow these instructions to upgrade the Palette Management Appliance to a chosen target version using a content bundle.

The upgrade process will incur downtime for the Palette management cluster, but your workload clusters will remain operational.

Prerequisites

- A healthy Palette management cluster where you can access the Local UI of the leader node.

- Verify that your local machine can access the Local UI, as airgapped environments may have strict network policies preventing direct access.

- If you use an external registry, the Palette CLI must be installed on your local machine so that you can upload the content to the external registry. Refer to the Palette CLI guide for installation instructions.

- Ensure your local machine has network access to the external registry server and that you have the necessary permissions to push images to the registry.

- Access to the Artifact Studio to download the content bundle for Palette.

Tip: If you do not have access to Artifact Studio, contact your Spectro Cloud representative or open a support ticket.

- Check that your upgrade path is supported by referring to the Supported Upgrade Paths.

- If upgrading from version 4.7.15, you must run an additional script before upgrading Palette. This script changes the access mode of the `zot` deployment's PersistentVolumeClaim (PVC) from `RWX` (ReadWriteMany) to `RWO` (ReadWriteOnce), which the upgrade process requires.

To run the 4.7.15 script, complete the following steps.

- Log in to the Local UI of the leader node of your Palette management cluster, for example, `https://<palette-leader-node-ip>:5080`.

- From the left main menu, click Cluster.

- On the Overview tab, within the Environment section, click the link for the Admin Kubeconfig File to download the kubeconfig file.

- On your local machine, ensure you have `kubectl` installed and set the `KUBECONFIG` environment variable to point to the downloaded file.

```shell
export KUBECONFIG=/path/to/downloaded/kubeconfig/file
```

- Issue the following command to check the PVCs in the `zot-system` namespace.

```shell
kubectl get pvc --namespace zot-system
```

Note the PVC name from the output, for example, `zot-pvc`. The access mode should be `RWX`. If it is already `RWO`, you can skip the remaining steps.

Example output:

```
NAME      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          VOLUMEATTRIBUTESCLASS   AGE
zot-pvc   Bound    pvc-6d603d91-d5f6-459a-b600-0a699cbb4936   250Gi      RWX            linstor-lvm-storage   <unset>                 3h58m
```

- Issue the following command to check the deployments in the `zot-system` namespace.

```shell
kubectl get deploy --namespace zot-system
```

Note the deployment name from the output, for example, `zot`.

Example output:

```
NAME   READY   UP-TO-DATE   AVAILABLE   AGE
zot    1/1     1            1           3h59m
```

- Use the following command to create a script named `change-pvc-access-mode.sh`. The script safely changes the access mode of a Kubernetes PersistentVolumeClaim (PVC) without losing data by temporarily deleting and re-creating the PVC while keeping the underlying PersistentVolume (PV) intact. It pauses your application during the change and then resumes it. For version 4.7.15, this is required for the `zot` deployment before upgrading.

```shell
cat > change-pvc-access-mode.sh <<'SCRIPT'
#!/bin/bash
# Script to change PVC access mode while preserving data in LINSTOR/Piraeus
# Usage: ./change-pvc-access-mode.sh <namespace> <pvc-name> <deployment-name> <new-access-mode>
# Example: ./change-pvc-access-mode.sh zot-system zot-pvc zot ReadWriteOnce

set -e

# Colors for output
RED='\033[0;31m'
GREEN='\033[0;32m'
YELLOW='\033[1;33m'
NC='\033[0m' # No Color

# Check arguments
if [ "$#" -ne 4 ]; then
  echo -e "${RED}Error: Invalid number of arguments${NC}"
  echo "Usage: $0 <namespace> <pvc-name> <deployment-name> <new-access-mode>"
  echo "Access modes: ReadWriteOnce, ReadWriteMany, ReadOnlyMany"
  exit 1
fi

NAMESPACE="$1"
PVC_NAME="$2"
DEPLOYMENT_NAME="$3"
NEW_ACCESS_MODE="$4"

# Validate access mode
if [[ ! "$NEW_ACCESS_MODE" =~ ^(ReadWriteOnce|ReadWriteMany|ReadOnlyMany)$ ]]; then
  echo -e "${RED}Error: Invalid access mode. Must be one of: ReadWriteOnce, ReadWriteMany, ReadOnlyMany${NC}"
  exit 1
fi

echo -e "${YELLOW}=== PVC Access Mode Migration Script ===${NC}"
echo "Namespace: $NAMESPACE"
echo "PVC: $PVC_NAME"
echo "Deployment: $DEPLOYMENT_NAME"
echo "New Access Mode: $NEW_ACCESS_MODE"
echo ""

# Step 1: Get PV name
echo -e "${GREEN}[1/9] Getting PV name...${NC}"
PV_NAME=$(kubectl get pvc "$PVC_NAME" -n "$NAMESPACE" -o jsonpath='{.spec.volumeName}')
if [ -z "$PV_NAME" ]; then
  echo -e "${RED}Error: Could not find PV for PVC $PVC_NAME${NC}"
  exit 1
fi
echo "PV Name: $PV_NAME"

# Step 2: Backup current configuration
echo -e "${GREEN}[2/9] Backing up current PVC and PV configuration...${NC}"
kubectl get pvc "$PVC_NAME" -n "$NAMESPACE" -o yaml > "${PVC_NAME}-backup-$(date +%Y%m%d-%H%M%S).yaml"
kubectl get pv "$PV_NAME" -o yaml > "${PV_NAME}-backup-$(date +%Y%m%d-%H%M%S).yaml"
echo "Backups created in current directory"

# Step 3: Set PV reclaim policy to Retain
echo -e "${GREEN}[3/9] Setting PV reclaim policy to Retain...${NC}"
CURRENT_POLICY=$(kubectl get pv "$PV_NAME" -o jsonpath='{.spec.persistentVolumeReclaimPolicy}')
echo "Current reclaim policy: $CURRENT_POLICY"
if [ "$CURRENT_POLICY" != "Retain" ]; then
  kubectl patch pv "$PV_NAME" -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'
  echo "Reclaim policy changed to Retain"
else
  echo "Already set to Retain"
fi

# Step 4: Get current replica count
echo -e "${GREEN}[4/9] Getting current deployment replica count...${NC}"
REPLICA_COUNT=$(kubectl get deployment "$DEPLOYMENT_NAME" -n "$NAMESPACE" -o jsonpath='{.spec.replicas}')
echo "Current replicas: $REPLICA_COUNT"

# Step 5: Scale down deployment
echo -e "${GREEN}[5/9] Scaling down deployment to 0...${NC}"
kubectl scale deployment "$DEPLOYMENT_NAME" -n "$NAMESPACE" --replicas=0
echo "Waiting for pods to terminate..."
kubectl wait --for=delete pod -l app="$DEPLOYMENT_NAME" -n "$NAMESPACE" --timeout=120s 2>/dev/null || true
sleep 5

# Step 6: Delete PVC
echo -e "${GREEN}[6/9] Deleting PVC (data preserved in PV)...${NC}"
kubectl delete pvc "$PVC_NAME" -n "$NAMESPACE"
echo "Waiting for PV to be Released..."
sleep 5
PV_STATUS=$(kubectl get pv "$PV_NAME" -o jsonpath='{.status.phase}')
echo "PV Status: $PV_STATUS"

# Step 7: Remove claimRef and update access mode
echo -e "${GREEN}[7/9] Removing claimRef from PV...${NC}"
kubectl patch pv "$PV_NAME" --type json -p='[{"op": "remove", "path": "/spec/claimRef"}]'
echo -e "${GREEN}[7/9] Updating PV access mode to $NEW_ACCESS_MODE...${NC}"
kubectl patch pv "$PV_NAME" -p "{\"spec\":{\"accessModes\":[\"$NEW_ACCESS_MODE\"]}}"
PV_STATUS=$(kubectl get pv "$PV_NAME" -o jsonpath='{.status.phase}')
echo "PV Status: $PV_STATUS"

# Step 8: Create new PVC
echo -e "${GREEN}[8/9] Creating new PVC with updated access mode...${NC}"
# Get original PVC details
STORAGE_SIZE=$(kubectl get pv "$PV_NAME" -o jsonpath='{.spec.capacity.storage}')
STORAGE_CLASS=$(kubectl get pv "$PV_NAME" -o jsonpath='{.spec.storageClassName}')
VOLUME_MODE=$(kubectl get pv "$PV_NAME" -o jsonpath='{.spec.volumeMode}')

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $PVC_NAME
  namespace: $NAMESPACE
  labels:
    app.kubernetes.io/managed-by: Helm
spec:
  accessModes:
    - $NEW_ACCESS_MODE
  resources:
    requests:
      storage: $STORAGE_SIZE
  storageClassName: $STORAGE_CLASS
  volumeMode: $VOLUME_MODE
  volumeName: $PV_NAME
EOF

echo "Waiting for PVC to bind..."
sleep 5
kubectl get pvc "$PVC_NAME" -n "$NAMESPACE"

# Step 9: Scale deployment back up
echo -e "${GREEN}[9/9] Scaling deployment back to $REPLICA_COUNT replicas...${NC}"
kubectl scale deployment "$DEPLOYMENT_NAME" -n "$NAMESPACE" --replicas="$REPLICA_COUNT"

echo ""
echo -e "${GREEN}=== Migration Complete ===${NC}"
echo ""
echo "Verifying final state..."
kubectl get pvc "$PVC_NAME" -n "$NAMESPACE"
echo ""
kubectl get pods -n "$NAMESPACE"
echo ""
echo -e "${YELLOW}Note: Wait for pods to be Running and verify your application is working correctly${NC}"
echo -e "${YELLOW}Backup files created: ${PVC_NAME}-backup-*.yaml and ${PV_NAME}-backup-*.yaml${NC}"
SCRIPT
```

- Make the script executable.

```shell
chmod u+x change-pvc-access-mode.sh
```

- Execute the script with the following parameters. Replace `<pvc-name>` and `<deployment-name>` with the values you noted from the `kubectl get pvc` and `kubectl get deploy` output.

```shell
./change-pvc-access-mode.sh zot-system <pvc-name> <deployment-name> ReadWriteOnce
```

Example command:

```shell
./change-pvc-access-mode.sh zot-system zot-pvc zot ReadWriteOnce
```

Example output:

```
=== PVC Access Mode Migration Script ===
Namespace: zot-system
PVC: zot-pvc
Deployment: zot
New Access Mode: ReadWriteOnce

[1/9] Getting PV name...
PV Name: pvc-6d603d91-d5f6-459a-b600-0a699cbb4936
[2/9] Backing up current PVC and PV configuration...
Backups created in current directory
[3/9] Setting PV reclaim policy to Retain...
Current reclaim policy: Delete
persistentvolume/pvc-6d603d91-d5f6-459a-b600-0a699cbb4936 patched
Reclaim policy changed to Retain
[4/9] Getting current deployment replica count...
Current replicas: 1
[5/9] Scaling down deployment to 0...
deployment.apps/zot scaled
Waiting for pods to terminate...
[6/9] Deleting PVC (data preserved in PV)...
persistentvolumeclaim "zot-pvc" deleted from zot-system namespace
Waiting for PV to be Released...
PV Status: Released
[7/9] Removing claimRef from PV...
persistentvolume/pvc-6d603d91-d5f6-459a-b600-0a699cbb4936 patched
[7/9] Updating PV access mode to ReadWriteOnce...
persistentvolume/pvc-6d603d91-d5f6-459a-b600-0a699cbb4936 patched
PV Status: Available
[8/9] Creating new PVC with updated access mode...
persistentvolumeclaim/zot-pvc created
Waiting for PVC to bind...
NAME      STATUS    VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          VOLUMEATTRIBUTESCLASS   AGE
zot-pvc   Pending   pvc-6d603d91-d5f6-459a-b600-0a699cbb4936   0                         linstor-lvm-storage   <unset>                 5s
[9/9] Scaling deployment back to 1 replicas...
deployment.apps/zot scaled

=== Migration Complete ===

Verifying final state...
NAME      STATUS    VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          VOLUMEATTRIBUTESCLASS   AGE
zot-pvc   Pending   pvc-6d603d91-d5f6-459a-b600-0a699cbb4936   0                         linstor-lvm-storage   <unset>                 7s

NAME                READY   STATUS    RESTARTS   AGE
zot-c96cb7b-hspd2   0/1     Pending   0          1s

Note: Wait for pods to be Running and verify your application is working correctly
Backup files created: zot-pvc-backup-*.yaml and pvc-6d603d91-d5f6-459a-b600-0a699cbb4936-backup-*.yaml
```

- Verify that the PVC status is `Bound` and the deployment pods are in the `Running` state before proceeding with the upgrade. Immediately after the script finishes, the PVC and pod can still show `Pending`, as in the example output above.

```shell
kubectl get pvc --namespace zot-system
```

Example output:

```
NAME      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          VOLUMEATTRIBUTESCLASS   AGE
zot-pvc   Bound    pvc-6d603d91-d5f6-459a-b600-0a699cbb4936   250Gi      RWO            linstor-lvm-storage   <unset>                 5m30s
```

```shell
kubectl get pods --namespace zot-system
```

Example output:

```
NAME                READY   STATUS    RESTARTS   AGE
zot-c96cb7b-hspd2   1/1     Running   0          5m3s
```
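Because a freshly re-created PVC can report `Pending` for a short time before it binds, you may prefer to poll instead of re-running `kubectl get` by hand. The following is a minimal sketch, not part of the Palette tooling: `wait_for_value` is a hypothetical helper, and the attempt counts and intervals in the usage comments are arbitrary assumptions you can tune.

```shell
#!/usr/bin/env bash
# wait_for_value: hypothetical helper, not shipped with Palette.
# Runs a command repeatedly until its output equals the expected value.
# Usage: wait_for_value <expected> <attempts> <interval-seconds> <command...>
wait_for_value() {
  local expected="$1" attempts="$2" interval="$3" i=1 actual=""
  shift 3
  while [ "$i" -le "$attempts" ]; do
    # Treat command errors (for example, a transient API error) as "not yet".
    actual="$("$@" 2>/dev/null || true)"
    if [ "$actual" = "$expected" ]; then
      echo "Got '$expected' after $i attempt(s)"
      return 0
    fi
    # Sleep only if another attempt follows.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$interval"
    fi
    i=$((i + 1))
  done
  echo "Timed out waiting for '$expected' (last value: '$actual')" >&2
  return 1
}

# Example usage (assumes KUBECONFIG points to the downloaded Admin Kubeconfig File):
#   wait_for_value Bound 30 5 \
#     kubectl get pvc zot-pvc --namespace zot-system -o jsonpath='{.status.phase}'
#   wait_for_value ReadWriteOnce 1 0 \
#     kubectl get pvc zot-pvc --namespace zot-system -o jsonpath='{.spec.accessModes[0]}'
```

Passing the command as separate arguments (rather than a string to `eval`) keeps the jsonpath quoting intact and avoids re-parsing by the shell.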

- If upgrading from version 4.7.27, you must run an additional script before upgrading Palette. This script ensures the `linstor-lvm-storage` StorageClass has the correct parameters and safely recreates the PVC while preserving data. This script is not required for single-node deployments, as it relates to high availability (HA) configurations only.

To run the 4.7.27 script, complete the following steps.

- Log in to the Local UI of the leader node of your Palette management cluster, for example, `https://<palette-leader-node-ip>:5080`.

- From the left main menu, click Cluster.

- On the Overview tab, within the Environment section, click the link for the Admin Kubeconfig File to download the kubeconfig file.

- On your local machine, ensure you have `kubectl` installed and set the `KUBECONFIG` environment variable to point to the downloaded file.

```shell
export KUBECONFIG=/path/to/downloaded/kubeconfig/file
```

- Use the following command to create a script named `recreate.sh`. The script ensures that the `linstor-lvm-storage` StorageClass has the correct parameters (`resourceGroup: lvm-ha` and `placementCount: 3`). If the StorageClass does not exist or has different parameters, the script recreates it. It then recreates the specified PVC, which may bind to a new PV, and handles `volumeBindingMode=WaitForFirstConsumer` by scaling down the deployment first.

```shell
cat > recreate.sh <<'SCRIPT'
#!/bin/bash
# Recreate a PVC with updated StorageClass configuration
# Ensures the linstor-lvm-storage StorageClass exists with correct parameters,
# then recreates the specified PVC which may bind to a new PV.
# Safely handles volumeBindingMode=WaitForFirstConsumer by scaling down the deployment first.

set -euo pipefail

NAMESPACE=${1:-zot-system}
PVC_NAME=${2:-zot-pvc}
DEPLOY_NAME=${3:-zot}
SC_NAME="linstor-lvm-storage"

echo "Checking for existing StorageClass '$SC_NAME'..."
if kubectl get storageclass $SC_NAME >/dev/null 2>&1; then
  echo "StorageClass '$SC_NAME' exists. Checking parameters..."
  CURRENT_RG=$(kubectl get storageclass $SC_NAME -o jsonpath='{.parameters.resourceGroup}')
  CURRENT_PC=$(kubectl get storageclass $SC_NAME -o jsonpath='{.parameters.placementCount}')

  if [[ "$CURRENT_RG" == "lvm-ha" && "$CURRENT_PC" == "3" ]]; then
    echo "✅ StorageClass already has resourceGroup='lvm-ha' and placementCount='3'. Skipping re-creation."
  else
    echo "StorageClass parameters differ (resourceGroup=$CURRENT_RG, placementCount=$CURRENT_PC). Recreating..."
    kubectl delete storageclass $SC_NAME
    echo "Recreating StorageClass '$SC_NAME'..."
    cat <<EOF | kubectl apply -f -
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: $SC_NAME
  annotations:
    storageclass.kubernetes.io/is-default-class: "false"
    helm.sh/hook: post-install,post-upgrade
provisioner: linstor.csi.linbit.com
parameters:
  placementCount: "3"
  resourceGroup: "lvm-ha"
  storagePool: "lvm-thin"
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
EOF
    echo "✅ StorageClass '$SC_NAME' updated."
  fi
else
  echo "StorageClass not found. Creating new one..."
  cat <<EOF | kubectl apply -f -
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: $SC_NAME
  annotations:
    storageclass.kubernetes.io/is-default-class: "false"
    helm.sh/hook: post-install,post-upgrade
provisioner: linstor.csi.linbit.com
parameters:
  placementCount: "3"
  resourceGroup: "lvm-ha"
  storagePool: "lvm-thin"
reclaimPolicy: Delete
volumeBindingMode: WaitForFirstConsumer
allowVolumeExpansion: true
EOF
  echo "✅ StorageClass '$SC_NAME' created."
fi

echo "Getting PV bound to PVC '${PVC_NAME}' in namespace '${NAMESPACE}'..."
PV_NAME=$(kubectl get pvc -n "${NAMESPACE}" "${PVC_NAME}" -o jsonpath='{.spec.volumeName}' 2>/dev/null || true)
if [ -z "${PV_NAME}" ]; then
  echo "❌ PVC '${PVC_NAME}' not found or not bound to any PV."
  exit 1
fi
echo "Found PV: ${PV_NAME}"

echo "Scaling down deployment '${DEPLOY_NAME}' to release PVC..."
kubectl scale deploy "${DEPLOY_NAME}" -n "${NAMESPACE}" --replicas=0 || true

echo "Deleting PVC '${PVC_NAME}' (PV data will be retained)..."
kubectl delete pvc -n "${NAMESPACE}" "${PVC_NAME}" --ignore-not-found

STORAGE=$(kubectl get pv "${PV_NAME}" -o jsonpath='{.spec.capacity.storage}')
ACCESSMODES=$(kubectl get pv "${PV_NAME}" -o jsonpath='{.spec.accessModes[0]}')

echo "Recreating PVC '${PVC_NAME}' bound to existing PV '${PV_NAME}'..."
cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${PVC_NAME}
  namespace: ${NAMESPACE}
spec:
  accessModes:
    - ${ACCESSMODES}
  storageClassName: linstor-lvm-storage
  resources:
    requests:
      storage: ${STORAGE}
EOF

echo "Scaling deployment '${DEPLOY_NAME}' back up..."
kubectl scale deploy "${DEPLOY_NAME}" -n "${NAMESPACE}" --replicas=1 || true

echo "Waiting for PVC '${PVC_NAME}' to rebind..."
for i in {1..30}; do
  STATUS=$(kubectl get pvc -n "${NAMESPACE}" "${PVC_NAME}" -o jsonpath='{.status.phase}' 2>/dev/null || echo "")
  if [[ "$STATUS" == "Bound" ]]; then
    echo "✅ PVC successfully bound."
    break
  fi
  sleep 2
done

# Get the new PV name after PVC is bound
NEW_PV_NAME=$(kubectl get pvc -n "${NAMESPACE}" "${PVC_NAME}" -o jsonpath='{.spec.volumeName}' 2>/dev/null || echo "")
if [ -z "${NEW_PV_NAME}" ]; then
  echo "Warning: Could not determine new PV name."
  NEW_PV_NAME="<check manually>"
fi

echo "Done!"
echo "✅ PVC '${PVC_NAME}' is bound to PV '${NEW_PV_NAME}' and data preserved."
echo "
Verify with:
kubectl get pvc -n ${NAMESPACE} ${PVC_NAME}
kubectl get pv ${NEW_PV_NAME}
"
SCRIPT
```

- Make the script executable.

```shell
chmod u+x recreate.sh
```

- Use the following command to execute the script. By default, the script targets the `zot-pvc` PVC and the `zot` deployment in the `zot-system` namespace; you can pass different values as the first, second, and third positional arguments.

```shell
./recreate.sh
```

Example output:

```
Checking for existing StorageClass 'linstor-lvm-storage'...
StorageClass 'linstor-lvm-storage' exists. Checking parameters...
✅ StorageClass already has resourceGroup='lvm-ha' and placementCount='3'. Skipping re-creation.
Getting PV bound to PVC 'zot-pvc' in namespace 'zot-system'...
Found PV: pvc-3b7a8f9c-1d2e-4f5a-9b0c-1a2b3c4d5e6f
Scaling down deployment 'zot' to release PVC...
deployment.apps/zot scaled
Deleting PVC 'zot-pvc' (PV data will be retained)...
persistentvolumeclaim "zot-pvc" deleted
Recreating PVC 'zot-pvc' bound to existing PV 'pvc-3b7a8f9c-1d2e-4f5a-9b0c-1a2b3c4d5e6f'...
persistentvolumeclaim/zot-pvc created
Scaling deployment 'zot' back up...
deployment.apps/zot scaled
Waiting for PVC 'zot-pvc' to rebind...
✅ PVC successfully bound.
Done!
✅ PVC 'zot-pvc' is bound to PV 'pvc-7e9d2a10-4a6b-431f-b9e7-8c2d1f3a5b6c' and data preserved.

Verify with:
kubectl get pvc -n zot-system zot-pvc
kubectl get pv pvc-7e9d2a10-4a6b-431f-b9e7-8c2d1f3a5b6c
```

- Verify that both the PVC and the PV have a status of `Bound`.

```shell
kubectl get pvc --namespace zot-system zot-pvc
```

Example output:

```
NAME      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          VOLUMEATTRIBUTESCLASS   AGE
zot-pvc   Bound    pvc-7e9d2a10-4a6b-431f-b9e7-8c2d1f3a5b6c   300Gi      RWO            linstor-lvm-storage   <unset>                 5m30s
```

```shell
kubectl get pv pvc-7e9d2a10-4a6b-431f-b9e7-8c2d1f3a5b6c
```

Example output:

```
NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                STORAGECLASS          REASON   AGE
pvc-7e9d2a10-4a6b-431f-b9e7-8c2d1f3a5b6c   300Gi      RWO            Delete           Bound    zot-system/zot-pvc   linstor-lvm-storage            5m30s
```
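`recreate.sh` changes the StorageClass only when its parameters differ from `resourceGroup: lvm-ha` and `placementCount: 3`. If you want to confirm those two values independently, before or after running the script, a read-only check such as the following sketch can help. `check_sc_params` is a hypothetical helper; it compares only the two values the script enforces and does not inspect any other StorageClass fields.

```shell
#!/usr/bin/env bash
# check_sc_params: hypothetical read-only helper, not part of recreate.sh.
# Compares the two StorageClass parameters that recreate.sh enforces.
# Usage: check_sc_params <resourceGroup> <placementCount>
check_sc_params() {
  local rg="$1" pc="$2"
  if [ "$rg" = "lvm-ha" ] && [ "$pc" = "3" ]; then
    echo "OK: resourceGroup=$rg placementCount=$pc"
    return 0
  fi
  # ${var:-<unset>} makes an empty (missing) parameter visible in the message.
  echo "MISMATCH: resourceGroup=${rg:-<unset>} placementCount=${pc:-<unset>}" >&2
  return 1
}

# Example usage against the cluster; jsonpath prints an empty string when a
# parameter is missing, which the check reports as <unset>:
#   SC=linstor-lvm-storage
#   check_sc_params \
#     "$(kubectl get storageclass "$SC" -o jsonpath='{.parameters.resourceGroup}')" \
#     "$(kubectl get storageclass "$SC" -o jsonpath='{.parameters.placementCount}')"
```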

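The two pre-upgrade scripts above apply only to specific starting versions, and the 4.7.27 script applies only to HA configurations, not single-node deployments. If you automate upgrades, that decision can be captured in a small helper such as the following sketch. `preupgrade_script` is hypothetical, treats any deployment with more than one node as HA (an assumption), and covers only the two versions named in this guide; it does not replace checking the Supported Upgrade Paths.

```shell
#!/usr/bin/env bash
# preupgrade_script: hypothetical helper that maps the installed Palette
# version and node count to the pre-upgrade script this guide requires.
# Usage: preupgrade_script <installed-version> <node-count>
preupgrade_script() {
  local version="${1#v}" nodes="$2"   # tolerate a leading "v", e.g. v4.7.15
  case "$version" in
    4.7.15)
      echo "change-pvc-access-mode.sh" ;;
    4.7.27)
      # Assumption: more than one node means an HA configuration.
      if [ "$nodes" -gt 1 ]; then echo "recreate.sh"; else echo "none"; fi ;;
    *)
      echo "none" ;;
  esac
}

# Example usage (node count read from the cluster; the version is whatever
# your current installation reports):
#   preupgrade_script 4.7.27 "$(kubectl get nodes --no-headers | wc -l)"
```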

Upgrade Palette

The upgrade steps depend on whether you use the internal Zot registry or an external registry. Follow the instructions that match your setup.

Internal Zot Registry

- Navigate to the Artifact Studio through a web browser and log in. Under Install Palette Enterprise, click the drop-down menu and select the version you want to upgrade your Palette Management Appliance to.

- Click Show Artifacts to display the Palette Enterprise Artifacts pop-up window. Click the Download button for the Content bundle (including Ubuntu).

- Wait until the content bundle is downloaded to your local machine. The bundle is downloaded in `.tar.zst` format alongside a signature file in `sig.bin` format.

Tip: Refer to the Artifact Studio guide for detailed guidance on how to verify the integrity of downloaded files using the provided signature file.

- Log in to the Local UI of the leader host of the Palette management cluster. By default, Local UI is accessible at `https://<node-ip>:5080`. Replace `<node-ip>` with the IP address of the leader host.

- From the left main menu, click Content.

- Click Actions in the top right and select Upload Content from the drop-down menu.

- Click the Upload icon to open the file selection dialog and select the content bundle file from your local machine. Alternatively, drag and drop the file into the upload area.

The upload starts automatically once you select the file. You can monitor the upload progress in the Upload Content dialog.

Wait for the File(s) uploaded successfully confirmation message or the green check mark to appear next to the upload progress bar.

- On the Content page, wait for the content to finish syncing. While the sync is in progress, a Syncing content: (N) items are pending banner appears to the left of disk usage; the banner disappears once the sync is complete. Syncing can take several minutes, depending on the size of the content bundle and your internal network speed.

- From the left main menu, click Cluster and select the Configuration tab.

- Click the Update drop-down menu and select Review Changes.

Warning: Ensure that the configured Zot password matches the password that you used when installing the Palette Management Appliance. If the passwords do not match, you cannot access the Zot registry after the upgrade.

If you have forgotten your Zot password, you can connect to your Kubernetes cluster and retrieve it from the Zot secret.

- Review the changes for each profile carefully and ensure you are satisfied with the proposed updates. Profiles may change between versions, including the addition or removal of properties.

Warning: Some upgrade paths require you to re-enter the configuration values you provided during the initial Palette installation, including the OCI Pack Registry Password and any other non-default settings.

Click Confirm Changes once satisfied.

- Click Update to start the upgrade process.

During the upgrade, the Palette system and tenant consoles are unavailable, and a warning message is displayed if you attempt to log in. You can monitor the upgrade progress on the Overview tab of the Cluster page.
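The warning in the Review Changes step notes that you can retrieve a forgotten Zot password from the Zot secret. Kubernetes stores Secret `data` values base64-encoded, so the raw value must be decoded before use. The following sketch assumes the Secret lives in the `zot-system` namespace; the Secret name and data key are placeholders because this guide does not name them, and `decode_secret_value` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# decode_secret_value: hypothetical helper that decodes a base64-encoded
# Kubernetes Secret value (Secret .data fields are always base64-encoded).
decode_secret_value() {
  printf '%s' "$1" | base64 -d
}

# Example usage. <zot-secret-name> and <password-key> are placeholders:
# list the Secrets first to find the one that holds the Zot credentials.
#   kubectl get secrets --namespace zot-system
#   decode_secret_value "$(kubectl get secret <zot-secret-name> --namespace zot-system \
#     -o jsonpath='{.data.<password-key>}')"
```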

External Registry

When using an external registry, you must upload the content bundle to both the external registry and the internal Zot registry, because the upgrade process requires the content in both. The following steps include instructions for uploading the content bundle to both registries.

- Navigate to the Artifact Studio through a web browser and log in. Under Install Palette Enterprise, click the drop-down menu and select the version you want to upgrade your Palette Management Appliance to.

- Click Show Artifacts to display the Palette Enterprise Artifacts pop-up window. Click the Download button for the Content bundle (including Ubuntu).

- Wait until the content bundle is downloaded to your local machine. The bundle is downloaded in `.tar.zst` format alongside a signature file in `sig.bin` format.

Tip: Refer to the Artifact Studio guide for detailed guidance on how to verify the integrity of downloaded files using the provided signature file.

- Open a terminal on your local machine and navigate to the directory where the content bundle file is located.

- Use the Palette CLI to log in to your external registry. Replace `<registry-dns-or-ip>` with the DNS name or IP address of your registry, `<registry-port>` with the port number of your registry (if applicable), `<username>` with your username, and `<password>` with your password.

```shell
palette content registry-login \
  --registry https://<registry-dns-or-ip>:<registry-port> \
  --username <username> \
  --password <password>
```

- Upload the file to your external registry using the Palette CLI. Replace `<content-bundle-zst>` with your downloaded content bundle file, `<registry-dns-or-ip>` with the DNS name or IP address of your registry, and `<registry-port>` with the port number of your registry (if applicable). If you have changed the base content path from the default, replace `spectro-content` with the correct content path.

Use the flags that match your TLS configuration, as shown in the following examples for regular TLS certificates, custom TLS certificates, and skipping TLS.

Regular TLS Certificate:

```shell
palette content push \
  --registry <registry-dns-or-ip>:<registry-port>/spectro-content \
  --file <content-bundle-zst>
```

Custom TLS Certificate:

```shell
palette content push \
  --registry <registry-dns-or-ip>:<registry-port>/spectro-content \
  --file <content-bundle-zst> \
  --ca-cert <path-to-ca-cert> \
  --tls-cert <path-to-tls-cert> \
  --tls-key <path-to-tls-key>
```

Skip TLS:

```shell
palette content push \
  --registry <registry-dns-or-ip>:<registry-port>/spectro-content \
  --file <content-bundle-zst> \
  --insecure
```

The following example output is expected when an upload is successful.

```
...
INFO[0366] successfully copied all artifacts from local bundle /root/tmp/bundle-extract/palette-enterprise-appliance-4.7.9.tar to remote bundle external.registry.com/spectro-content/bundle-definition:palette-enterprise-appliance-4.7.9
-----------------------------
Push Summary
-----------------------------
local bundle palette-enterprise-appliance-4.7.9 pushed to external.registry.com/spectro-content
```

Tip: The authentication token has a timeout period. If the token expires, re-authenticate to the OCI registry and restart the upload process.

- Log in to the Local UI of the leader host of the Palette management cluster. By default, Local UI is accessible at `https://<node-ip>:5080`. Replace `<node-ip>` with the IP address of the leader host.

- From the left main menu, click Content.

- Click Actions in the top right and select Upload Content from the drop-down menu.

- Click the Upload icon to open the file selection dialog and select the content bundle file from your local machine. Alternatively, drag and drop the file into the upload area.

The upload starts automatically once you select the file. You can monitor the upload progress in the Upload Content dialog.

Wait for the File(s) uploaded successfully confirmation message or the green check mark to appear next to the upload progress bar.

- On the Content page, wait for the content to finish syncing. While the sync is in progress, a Syncing content: (N) items are pending banner appears to the left of disk usage; the banner disappears once the sync is complete. Syncing can take several minutes, depending on the size of the content bundle and your internal network speed.

- From the left main menu, click Cluster and select the Configuration tab.

- Click the Update drop-down menu and select Review Changes.

- Review the changes for each profile carefully and ensure you are satisfied with the proposed updates. Profiles may change between versions, including the addition or removal of properties.

Warning: Some upgrade paths require you to re-enter the configuration values you provided during the initial Palette installation, including the OCI Pack Registry Password and any other non-default settings.

Click Confirm Changes once satisfied.

- Click Update to start the upgrade process.

During the upgrade, the Palette system and tenant consoles are unavailable, and a warning message is displayed if you attempt to log in. You can monitor the upgrade progress on the Overview tab of the Cluster page.
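The three `palette content push` variants in the upload step above differ only in their TLS flags. If you script the upload, a small wrapper can assemble the arguments for the chosen mode so you can review them before pushing. This is a hypothetical sketch: `build_push_args` and its `regular`/`custom`/`insecure` mode names are illustrative, and it uses only the flags shown in the examples above (`--registry`, `--file`, `--ca-cert`, `--tls-cert`, `--tls-key`, `--insecure`).

```shell
#!/usr/bin/env bash
# build_push_args: hypothetical helper that prints the `palette content push`
# arguments, one per line, for one of the three TLS options in this guide.
# Usage: build_push_args <registry>/<content-path> <bundle-file> <mode> [ca-cert tls-cert tls-key]
#   mode: regular | custom | insecure
build_push_args() {
  local registry="$1" file="$2" mode="$3"
  case "$mode" in
    regular|insecure) ;;
    custom)
      # Custom TLS needs all three certificate paths.
      if [ "$#" -lt 6 ]; then
        echo "custom mode needs <ca-cert> <tls-cert> <tls-key>" >&2
        return 1
      fi ;;
    *)
      echo "unknown TLS mode: $mode" >&2
      return 1 ;;
  esac
  printf '%s\n' content push --registry "$registry" --file "$file"
  case "$mode" in
    custom) printf '%s\n' --ca-cert "$4" --tls-cert "$5" --tls-key "$6" ;;
    insecure) printf '%s\n' --insecure ;;
  esac
}

# Example usage (bash): review the arguments, then run the real command.
#   mapfile -t ARGS < <(build_push_args "registry.example.com:5000/spectro-content" \
#     "palette-content.tar.zst" insecure)
#   printf '%s ' palette "${ARGS[@]}"; echo
#   palette "${ARGS[@]}"
```

Printing one argument per line and reading it back with `mapfile` keeps paths that contain spaces intact, which a single space-joined string would not.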

Validate

- Log in to the Local UI of the leader host of the Palette management cluster. By default, Local UI is accessible at `https://<node-ip>:5080`. Replace `<node-ip>` with the IP address of the leader host.

- From the left main menu, click Cluster.

- Check that the `palette-mgmt-plane` pack displays the upgraded version number and has a Running status.

- Verify that you can log in to the Palette system console and that no warning message is displayed.

-

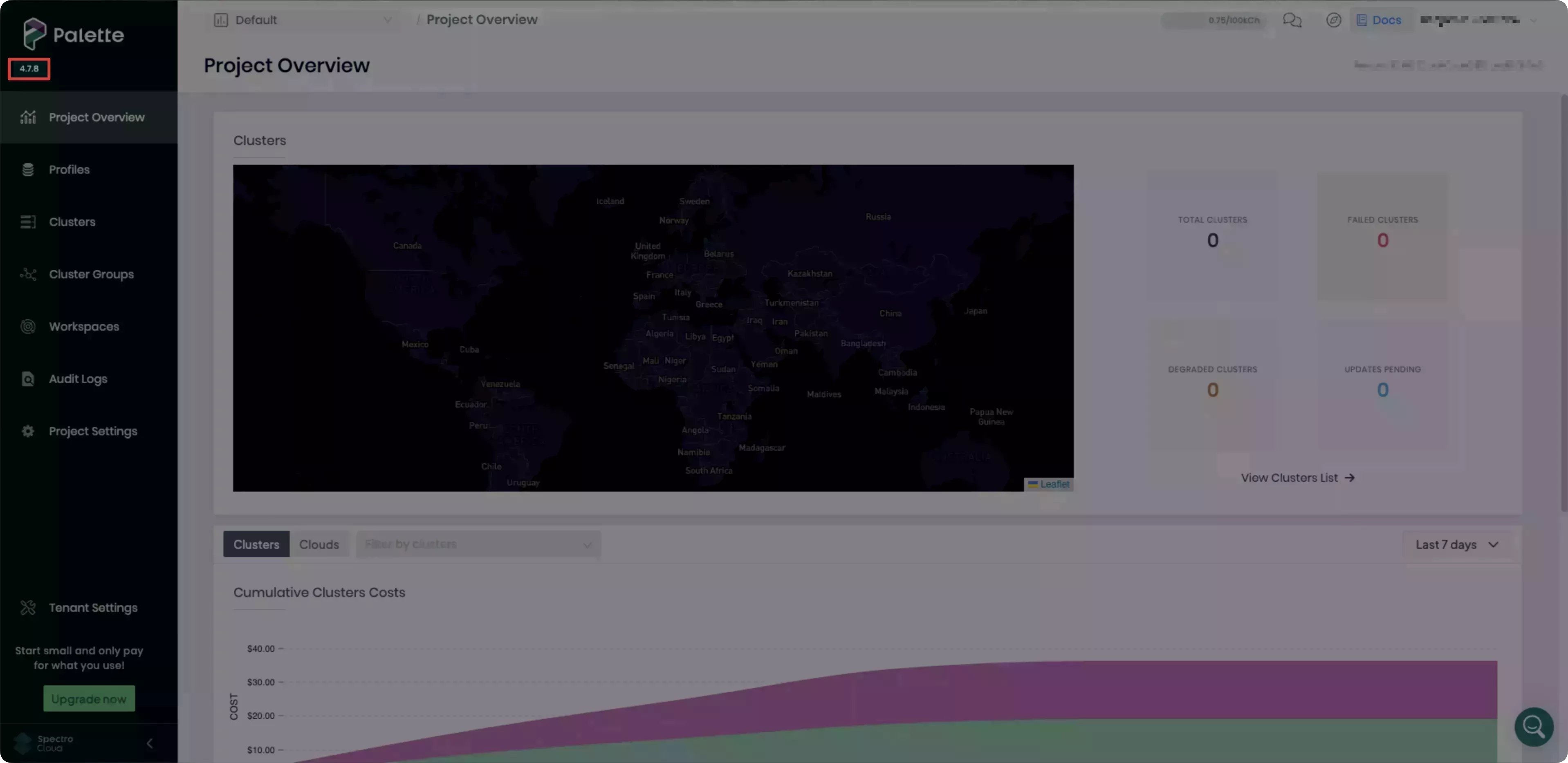

If you have configured a tenant, log in to the tenant console and verify that Palette displays the correct version number.